15 June 2018

Automated testing of a Business Central Extension V2 in TFS

One of the requirements for publishing a Business Central app (extension V2) to AppSource is an automated test package. That obviously makes a lot of sense, but how to get there is not an entirely clear path. Microsoft shares their example code and some guidelines, but creating the test code is only half of the story: actually running the tests and acting on the results is what brings the benefit. In the following post you will see how to run those tests in TFS, either on a fixed schedule or triggered by events, how the results are presented and how you can then work with them.

The TL;DR

Building the test application works very similarly to building the application itself; you only need to make sure that the base test toolkit is available. Running the tests automatically from an extension is currently a bit more tricky, but it can be achieved by calling a codeunit which in turn calls the test cases. Through some XML manipulation you can then get the results into TFS and, if necessary, directly link a failure to a new bug WorkItem.

Building your test code, running it and getting the results

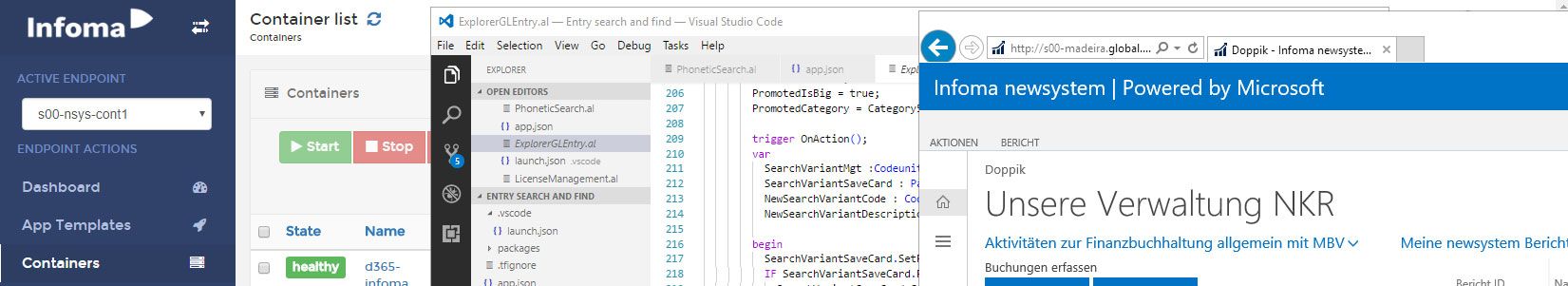

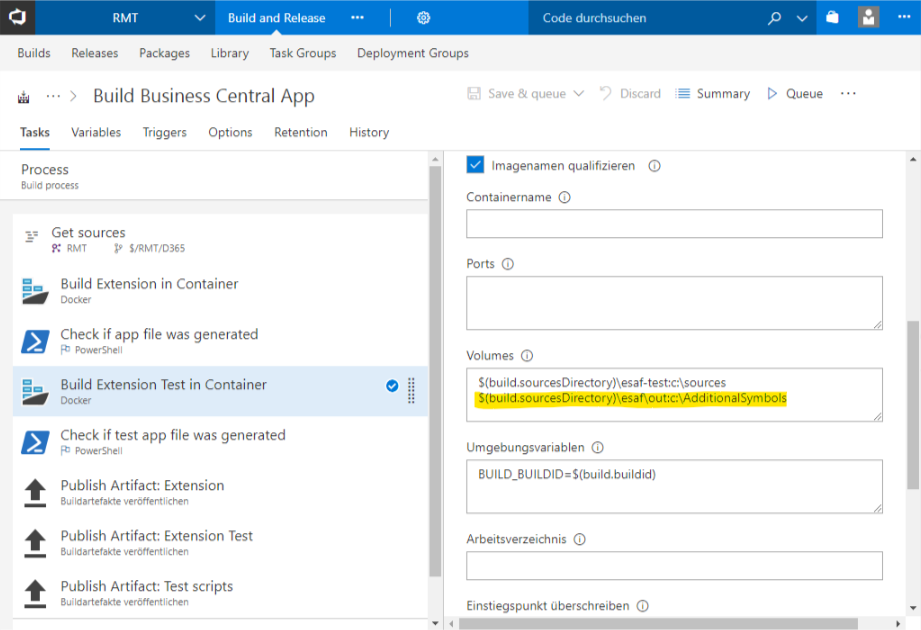

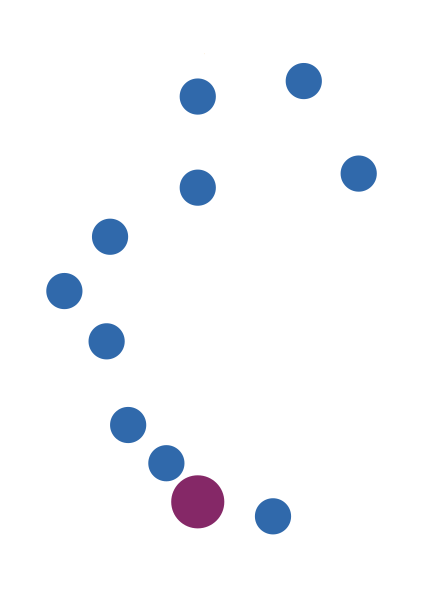

I’ve written about automatically building the extension itself, and building the test extension is not too different: the test extension depends on the main extension and on the test toolkit. Therefore the build container needs to import the test toolkit and reuse the extension built in the first build step. As is very often the case, while I don’t use Microsoft’s navcontainerhelper[1], it is an invaluable source of scripts to achieve what you need. It has its own cmdlet for importing the test toolkit, called Import-TestToolkitToNavContainer, and with that, coding the import is easy. We have also already published the built extension as an artifact, so we only need to link that into the new container used to build the test extension. On a quick tangent: I could also reuse the same container to build both extensions, but I prefer a clean build environment for every step, which is very easily done with containers. My build process now looks like this:

As you can see, I am mapping the out folder of the first build to c:\AdditionalSymbols in the second build. With that in place I can easily reference those files in my build script:

    param([string]$signingPwd)

    Import-Module c:\build\Convert-ALCOutputToTFS.psm1

    $AdditionalSymbolsFolder = 'C:\AdditionalSymbols'
    $ALProjectFolder = 'C:\sources'
    $AlPackageOutParent = Join-Path $ALProjectFolder 'out'
    $ALPackageCachePath = 'C:\build\symbols'
    $ALCompilerPath = 'C:\build\vsix\extension\bin'

    $ExtensionAppJsonFile = Join-Path $ALProjectFolder 'app.json'
    $ExtensionAppJsonObject = Get-Content -Raw -Path $ExtensionAppJsonFile | ConvertFrom-Json
    $Publisher = $ExtensionAppJsonObject.Publisher
    $Name = $ExtensionAppJsonObject.Name
    $ExtensionName = $Publisher + '_' + $Name + '_' + $ExtensionAppJsonObject.Version + '.app'
    $ExtensionAppJsonObject | ConvertTo-Json | set-content $ExtensionAppJsonFile

    Write-Host "Using Symbols Folder: " $ALPackageCachePath
    Write-Host "Checking for additional symbols"
    # in the test build, C:\AdditionalSymbols holds the main app from the first build
    if (Test-Path (Join-Path $AdditionalSymbolsFolder "*.app")) {
        Copy-Item (Join-Path $AdditionalSymbolsFolder "*.app") -Destination $ALPackageCachePath
    }

    Write-Host "Using Compiler: " $ALCompilerPath
    $AlPackageOutPath = Join-Path $AlPackageOutParent $ExtensionName
    if (-not (Test-Path $AlPackageOutParent)) {
        mkdir $AlPackageOutParent
    }
    Write-Host "Using Output Folder: " $AlPackageOutPath

    # compile against the package cache, which now also contains the additional symbols
    Set-Location -Path $ALCompilerPath
    & .\alc.exe /project:$ALProjectFolder /packagecachepath:$ALPackageCachePath /out:$AlPackageOutPath | Convert-ALCOutputToTFS
    if (-not (Test-Path $AlPackageOutPath)) {
        Write-Error "no app file was generated"
        exit 1
    }

    # replace and register NavSip.dll, which signtool needs to sign .app files
    RegSvr32 /u /s "C:\Windows\System32\NavSip.dll"
    RegSvr32 /u /s "C:\Windows\SysWow64\NavSip.dll"
    Copy-Item C:\build\32\NavSip.dll C:\Windows\system32
    Copy-Item C:\build\64\NavSip.dll C:\Windows\SysWOW64\
    RegSvr32 /s "C:\Windows\System32\NavSip.dll"
    RegSvr32 /s "C:\Windows\SysWow64\NavSip.dll"

    c:\build\signtool.exe sign /f 'C:\build\signcert.p12' /p $signingPwd /t http://timestamp.verisign.com/scripts/timestamp.dll $AlPackageOutPath

The Copy-Item inside the Test-Path check copies all .app files from the AdditionalSymbols folder to my ALPackageCachePath. The /packagecachepath argument of the alc.exe call then references that folder when compiling the test application, so that the compiler can find the necessary symbols. If you compare the script closely to the one I presented in my last blog post, you will see that I am no longer incrementing the app version number based on the TFS build number. The reason is that the test app depends on the main app. If the main app always got a new build number, I would also need to update the dependency. That could be automated, but it would also mean a difference between what I have in source control and what actually gets built. The pro obviously is a direct link between the build and the result and a unique result for every build, but in my opinion the described con outweighs that. As always, your mileage may vary based on your requirements and preferences.
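The dependency update mentioned above could be automated roughly as follows. This is a minimal sketch, not part of the pipeline described here. The function name Sync-TestAppDependency is hypothetical. It assumes that the test app's app.json lists the main app in its dependencies array with name, publisher and version properties, and that the main app's app.json is available to the test build.

```powershell
# Hypothetical helper: align the test app's dependency with the main app's version.
function Sync-TestAppDependency {
    param(
        [Parameter(Mandatory)] [string] $MainAppJson,
        [Parameter(Mandatory)] [string] $TestAppJson
    )
    $main = Get-Content -Raw -Path $MainAppJson | ConvertFrom-Json
    $test = Get-Content -Raw -Path $TestAppJson | ConvertFrom-Json

    # Find the dependency entry that points to the main app
    $dep = @($test.dependencies | Where-Object {
        $_.name -eq $main.name -and $_.publisher -eq $main.publisher
    })
    if ($dep.Count -ne 1) {
        throw "Expected exactly one dependency on $($main.publisher)_$($main.name), found $($dep.Count)"
    }

    # The entry is a reference into $test, so this updates the parsed document
    $dep[0].version = $main.version

    # A higher -Depth keeps nested objects intact (the default depth is 2)
    $test | ConvertTo-Json -Depth 10 | Set-Content -Path $TestAppJson
    return $main.version
}
```

It would run before alc.exe in the test build. The trade-off from the paragraph above still applies: the committed app.json of the test app would no longer match what actually gets built.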

My script to create the build container got slightly more complicated. It now also needs to import the test toolkit (the Import-NAVApplicationObject loop over the .fob files, based on the navcontainerhelper code) and download the test symbols ($testURL and the matching Invoke-RestMethod call). The basic flow didn’t change:

    Write-Host "set up build environment"

    # accept the container's self-signed certificate for the symbol downloads
    Add-Type @"
    using System;
    using System.Net;
    using System.Net.Security;
    using System.Security.Cryptography.X509Certificates;

    public class ServerCertificateValidationCallback
    {
        public static void Ignore()
        {
            ServicePointManager.ServerCertificateValidationCallback +=
                delegate
                (
                    Object obj,
                    X509Certificate certificate,
                    X509Chain chain,
                    SslPolicyErrors errors
                )
                {
                    return true;
                };
        }
    }
    "@

    [ServerCertificateValidationCallback]::Ignore();

    # basic authentication header for the dev endpoint
    $pair = "autobuild:autopassword"
    $bytes = [System.Text.Encoding]::ASCII.GetBytes($pair)
    $base64 = [System.Convert]::ToBase64String($bytes)
    $basicAuthValue = "Basic $base64"
    $headers = @{ Authorization = $basicAuthValue }

    $roleTailoredClientFolder = (Get-Item "C:\Program Files (x86)\Microsoft Dynamics NAV\*\RoleTailored Client").FullName
    Import-Module "$roleTailoredClientFolder\Microsoft.Dynamics.Nav.Model.Tools.psd1" -wa SilentlyContinue

    Write-Host "Create folders"
    mkdir -Path "c:\ForBuildStage" | Out-Null
    mkdir -Path "c:\ForBuildStage\symbols" | Out-Null
    mkdir -Path "c:\ForBuildStage\vsix" | Out-Null

    Write-Host "Import Test Toolkit"
    # $databaseName and $databaseServer are presumably provided by the NAV container's startup scripts
    Get-ChildItem -Path "C:\TestToolKit\*.fob" | foreach {
        $objectsFile = $_.FullName
        Import-NAVApplicationObject -Path $objectsFile `
            -DatabaseName $databaseName `
            -DatabaseServer $databaseServer `
            -ImportAction Overwrite `
            -SynchronizeSchemaChanges Force `
            -NavServerName localhost `
            -NavServerInstance NAV `
            -NavServerManagementPort 7045 `
            -Confirm:$false
    }

    $hostname = hostname
    $release = $env:bc_release

    Write-Host "Download symbols for $release"
    $usURL = 'https://'+$hostname+':7049/NAV/dev/packages?publisher=Microsoft&appName=Application&versionText='+$release
    $sysURL = 'https://'+$hostname+':7049/NAV/dev/packages?publisher=Microsoft&appName=System&versionText='+$release
    $testURL = 'https://'+$hostname+':7049/NAV/dev/packages?publisher=Microsoft&appName=Test&versionText='+$release
    Invoke-RestMethod -Method Get -Uri ($usURL) -Headers $headers -OutFile 'c:\ForBuildStage\symbols\Application.app'
    Invoke-RestMethod -Method Get -Uri ($sysURL) -Headers $headers -OutFile 'c:\ForBuildStage\symbols\System.app'
    Invoke-RestMethod -Method Get -Uri ($testURL) -Headers $headers -OutFile 'c:\ForBuildStage\symbols\Test.app'

    Write-Host "Copy vsix as zip"
    $vsixFile = (Get-ChildItem -Path C:\inetpub\wwwroot\http -Filter "al*.vsix")[0]
    Rename-Item $vsixFile.FullName -NewName ($vsixFile.Name+'.zip')
    Copy-Item -Path ($vsixFile.FullName+'.zip') 'C:\ForBuildStage\vsix'
    Copy-Item -Path 'c:\run\my\build.ps1' c:\ForBuildStage
    Copy-Item -Path 'c:\run\my\Convert-ALCOutputToTFS.psm1' c:\ForBuildStage
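The three symbol downloads differ only in the appName query parameter of the dev endpoint, so one small function could generate all of them. This is a sketch under a few assumptions. Get-SymbolPackageUrl is a hypothetical name. The port (7049) and server instance (NAV) are taken from the script above. Escaping the values is an extra precaution that the original script does not take.

```powershell
# Hypothetical helper: build the dev endpoint URL for one Microsoft symbol package.
function Get-SymbolPackageUrl {
    param(
        [Parameter(Mandatory)] [string] $HostName,
        [Parameter(Mandatory)] [string] $AppName,
        [Parameter(Mandatory)] [string] $Release,
        [int] $Port = 7049,
        [string] $Instance = 'NAV'
    )
    # Escape the values so that unusual release strings cannot break the query string
    $app = [System.Uri]::EscapeDataString($AppName)
    $version = [System.Uri]::EscapeDataString($Release)
    # ${...} keeps PowerShell from parsing "HostName:" as a drive-qualified variable
    "https://${HostName}:${Port}/${Instance}/dev/packages?publisher=Microsoft&appName=${app}&versionText=${version}"
}
```

With this helper, the three Invoke-RestMethod calls in the container script could become one loop over 'Application', 'System' and 'Test'. Each name would be passed as -AppName, and each package written to a file named after the app in c:\ForBuildStage\symbols.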

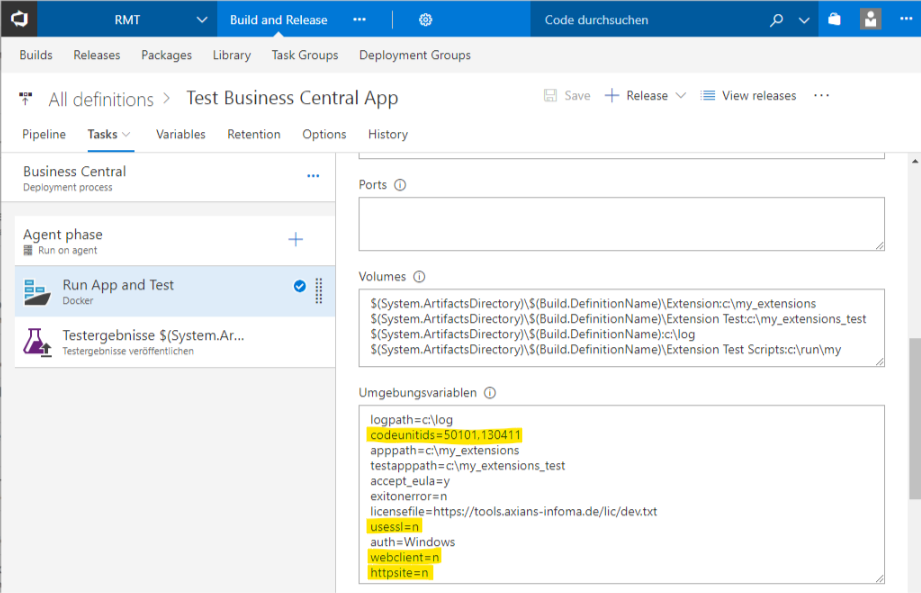

With that in place we can now call the test code. Again, I wanted to do that in a fresh container to make sure it is a pristine environment and no artifacts from the build influence the actual test. I am using a release process in TFS which references the results of my build process (see "Volumes") and uses a script to call the codeunits. To stay flexible, I pass the codeunit IDs through environment variables (German "Umgebungsvariablen"[2]). I also deactivate everything I don’t need, like SSL, the web client or the HTTP download site, in order to start the container as quickly as possible.
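Because the IDs arrive as a single environment variable, a typo only shows up once the codeunit call fails inside NAV. Below is a small sketch that validates and normalizes the value first. It assumes that the wrapper behind RunTestCodeunitsCS expects a comma-separated list, which the CS suffix suggests, although the wrapper itself is not shown. ConvertTo-CodeunitIdList is a hypothetical name.

```powershell
# Hypothetical helper: validate a comma-separated list of codeunit IDs
# and return it without blanks or empty entries.
function ConvertTo-CodeunitIdList {
    param([Parameter(Mandatory)] [AllowEmptyString()] [string] $Value)

    $ids = foreach ($part in $Value -split ',') {
        $trimmed = $part.Trim()
        if ($trimmed -eq '') { continue }   # tolerate "50100,,50101" and trailing commas
        $id = 0
        if (-not [int]::TryParse($trimmed, [ref] $id) -or $id -le 0) {
            throw "'$trimmed' is not a valid codeunit ID"
        }
        $id
    }
    if (@($ids).Count -eq 0) { throw 'No codeunit IDs given' }
    @($ids) -join ','
}
```

In the test script, the result of ConvertTo-CodeunitIdList $env:codeunitids could then replace $env:codeunitids as the -Argument of Invoke-NAVCodeunit and as the parameter of GetResultsForCodeunitsCS.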

After container startup[3], my test script kicks in:

    $roleTailoredClientFolder = (Get-Item "C:\Program Files (x86)\Microsoft Dynamics NAV\*\RoleTailored Client").FullName
    Import-Module "$roleTailoredClientFolder\Microsoft.Dynamics.Nav.Model.Tools.psd1" -wa SilentlyContinue
    $serviceFolder = (Get-Item "C:\Program Files\Microsoft Dynamics NAV\*\Service").FullName
    Import-Module "$serviceFolder\Microsoft.Dynamics.Nav.Apps.Management.psd1" -wa SilentlyContinue

    # Invoke-NAVCodeunit uses Windows authentication, so the container user has to be a NAV user
    New-NAVServerUser -WindowsAccount (whoami) NAV
    New-NAVServerUserPermissionSet -PermissionSetId SUPER -WindowsAccount (whoami) NAV

    Write-Host "Import all extensions from ${env:apppath}"
    Get-ChildItem $env:apppath -Filter "*.app" | ForEach-Object {
        $ext = Publish-NAVApp -ServerInstance NAV -Path $_.FullName -PassThru
        Sync-NAVApp -ServerInstance NAV -Name $ext.Name -Tenant default
        Install-NAVApp -ServerInstance NAV -Name $ext.Name -Tenant default
    }

    Write-Host "Import all extensions from ${env:testapppath}"
    Get-ChildItem $env:testapppath -Filter "*.app" | ForEach-Object {
        $ext = Publish-NAVApp -ServerInstance NAV -Path $_.FullName -PassThru
        Sync-NAVApp -ServerInstance NAV -Name $ext.Name -Tenant default
        Install-NAVApp -ServerInstance NAV -Name $ext.Name -Tenant default
    }

    Write-Host "Import test toolkit"
    # $databaseName and $databaseServer are presumably provided by the NAV container's startup scripts
    Get-ChildItem -Path "C:\TestToolKit\*.fob" | ForEach-Object {
        $objectsFile = $_.FullName
        Import-NAVApplicationObject -Path $objectsFile `
            -DatabaseName $databaseName `
            -DatabaseServer $databaseServer `
            -ImportAction Overwrite `
            -SynchronizeSchemaChanges Force `
            -NavServerName localhost `
            -NavServerInstance NAV `
            -NavServerManagementPort 7045 `
            -Confirm:$false
    }

    Write-Host "Invoke auto test CUs ${env:codeunitids}"
    $companyname = (Get-NAVCompany NAV)[0].CompanyName
    Invoke-NAVCodeunit -ServerInstance NAV -CompanyName $companyname -CodeunitId 50104 -MethodName RunTestCodeunitsCS -Argument $env:codeunitids

    Write-Host "Get Results"
    $hostname = (hostname)
    $proxy = New-WebServiceProxy -Uri "http://${hostname}:7047/NAV/WS/$companyname/Codeunit/TestHandling" -UseDefaultCredential
    # write explicitly as UTF-8, see the lessons learned below
    $proxy.GetResultsForCodeunitsCS($env:codeunitids) | Out-File "${env:logpath}\result.xml" -Encoding utf8

After publishing, syncing and installing the main and test extensions, the script again imports the test toolkit. It then uses Invoke-NAVCodeunit to call my own extension codeunit (50104), which registers and runs the test codeunits. That codeunit does a couple of things. Mainly, it registers the test codeunits by calling the standard CAL Test Runner codeunit after setting publish mode (SETPUBLISHMODE in AddTests). It then calls the runner again after setting test mode (SETTESTMODE in RunTests). I am not 100% convinced that this is indeed the intended way to achieve my goal, so please leave a comment or get in contact if you know of a better way.

    procedure RunTestCodeunits(codids: List of [Integer])
    var
        i: Integer;
        AllObjWithCaption: Record AllObjWithCaption;
        TestLineNo: Integer;
        CALTestLine: Record "CAL Test Line";
        codid: Integer;
    begin
        // Init() and TestLineExists() are further procedures of this codeunit, not shown here
        Init();
        Clear(CALTestLine);
        // register every requested test codeunit as a line of the DEFAULT test suite
        foreach codid in codids do begin
            AllObjWithCaption.Get(AllObjWithCaption."Object Type"::Codeunit, codid);
            AddTests('DEFAULT', AllObjWithCaption."Object ID", TestLineNo);
            TestLineNo := TestLineNo + 10000;
        end;
        // then run the whole suite
        RunTests('DEFAULT');
    end;

    local procedure AddTests(TestSuiteName: Code[10]; TestCodeunitId: Integer; LineNo: Integer)
    var
        CALTestLine: Record "CAL Test Line";
        CALTestRunner: Codeunit "CAL Test Runner";
        CALTestManagement: Codeunit "CAL Test Management";
    begin
        WITH CALTestLine DO BEGIN
            IF TestLineExists(TestSuiteName, TestCodeunitId) THEN
                EXIT;
            INIT;
            VALIDATE("Test Suite", TestSuiteName);
            VALIDATE("Line No.", LineNo);
            VALIDATE("Line Type", "Line Type"::Codeunit);
            VALIDATE("Test Codeunit", TestCodeunitId);
            VALIDATE(Run, TRUE);
            INSERT(TRUE);
            // first call in publish mode, which registers the test codeunit
            CALTestManagement.SETPUBLISHMODE();
            CALTestRunner.Run(CALTestLine);
        end;
    end;

    local procedure RunTests(TestSuiteName: Code[10])
    var
        CALTestLine: Record "CAL Test Line";
        AllObj: Record AllObj;
        CALTestRunner: Codeunit "CAL Test Runner";
        CALTestManagement: Codeunit "CAL Test Management";
    begin
        CALTestLine."Test Suite" := TestSuiteName;
        // second call in test mode, which actually runs the tests of the suite
        CALTestManagement.SETTESTMODE();
        CALTestRunner.Run(CALTestLine);
    end;

After the tests have run, we need a way to get to the results and share them with TFS. Kamil Sacek has shared a lot of extremely helpful snippets and scripts in that area, and my code to create an NUnit result is heavily inspired by his scripts. As we now have good XML support in AL and I needed to make a few minor tweaks to his code, I decided not to do it in PowerShell but to create a codeunit for it instead. It just goes through the results and outputs them as XML:

    procedure GetResultsForCodeunits(codids: List of [Integer]): Text
    var
        doc: XmlDocument;
        dec: XmlDeclaration;
        run: XmlElement;
        suite: XmlElement;
        tcase: XmlElement;
        failure: XmlElement;
        message: XmlElement;
        stacktrace: XmlElement;
        callstack: Text;
        testresults: Record "CAL Test Result";
        search: Text;
        node: XmlNode;
        TempBlob: Record TempBlob temporary;
        outStr: OutStream;
        inStr: InStream;
        codid: Integer;
        resultText: Text;
        timeDuration: Time;
    begin
        doc := XmlDocument.Create();
        dec := XmlDeclaration.Create('1.0', 'UTF-8', 'no');
        doc.SetDeclaration(dec);

        // create the root test-run, data will be updated later
        run := XmlElement.Create('test-run');
        run.SetAttribute('name', 'Automated Test Run');
        run.SetAttribute('testcasecount', '0');
        run.SetAttribute('run-date', Format(Today(), 0, '<year4>-<month,2>-<day,2>'));
        run.SetAttribute('start-time', Format(CurrentDateTime(), 0, '<hours,2>:<minutes,2>:<seconds,2>'));
        run.SetAttribute('result', 'Passed');
        run.SetAttribute('passed', '0');
        run.SetAttribute('total', '0');
        run.SetAttribute('failed', '0');
        run.SetAttribute('inconclusive', '0');
        run.SetAttribute('skipped', '0');
        run.SetAttribute('asserts', '0');
        doc.Add(run);

        // get results for all requested codeunits
        foreach codid in codids do begin
            testresults.SetRange("Codeunit ID", codid);
            if testresults.FindSet() then
                repeat
                    with testresults do begin
                        // reuse the test-suite of this codeunit or create it if it does not exist yet
                        search := StrSubstNo('/test-run/test-suite[@name="%1"]', "Codeunit Name");
                        if run.SelectSingleNode(search, node) then
                            suite := node.AsXmlElement()
                        else begin
                            suite := XmlElement.Create('test-suite');
                            suite.SetAttribute('name', "Codeunit Name");
                            suite.SetAttribute('fullname', "Codeunit Name");
                            suite.SetAttribute('type', 'Assembly');
                            suite.SetAttribute('status', 'Passed');
                            suite.SetAttribute('testcasecount', '0');
                            suite.SetAttribute('result', 'Passed');
                            suite.SetAttribute('passed', '0');
                            suite.SetAttribute('total', '0');
                            suite.SetAttribute('failed', '0');
                            suite.SetAttribute('inconclusive', '0');
                            suite.SetAttribute('skipped', '0');
                            suite.SetAttribute('asserts', '0');
                            run.Add(suite);
                        end;

                        // create the test-case
                        tcase := XmlElement.Create('test-case');
                        case Result of
                            Result::Passed:
                                begin
                                    resultText := 'Passed';
                                    incrementAttribute(suite, 'passed');
                                    incrementAttribute(run, 'passed');
                                end;
                            Result::Failed:
                                begin
                                    resultText := 'Failed';
                                    incrementAttribute(suite, 'failed');
                                    incrementAttribute(run, 'failed');
                                    // mark the parents as failed; the NUnit 3 format uses the 'result' attribute for this
                                    suite.SetAttribute('status', 'Failed');
                                    suite.SetAttribute('result', 'Failed');
                                    run.SetAttribute('status', 'Failed');
                                    run.SetAttribute('result', 'Failed');
                                    failure := XmlElement.Create('failure');
                                    tcase.Add(failure);
                                    message := XmlElement.Create('message', '', "Error Message");
                                    failure.Add(message);
                                    // BLOB fields are not loaded by FindSet, so calculate the call stack before reading it
                                    CalcFields("Call Stack");
                                    "Call Stack".CreateInStream(inStr, TextEncoding::UTF8);
                                    callstack := inStrToText(inStr);
                                    stacktrace := XmlElement.Create('stack-trace', '', callstack);
                                    failure.Add(stacktrace);
                                end;
                            Result::Inconclusive:
                                begin
                                    resultText := 'Inconclusive';
                                    incrementAttribute(suite, 'inconclusive');
                                    incrementAttribute(run, 'inconclusive');
                                end;
                            Result::Incomplete:
                                begin
                                    resultText := 'Incomplete';
                                end;
                        end;

                        tcase.SetAttribute('id', Format("No."));
                        tcase.SetAttribute('name', "Codeunit Name" + ':' + "Function Name");
                        tcase.SetAttribute('fullname', "Codeunit Name" + ':' + "Function Name");
                        tcase.SetAttribute('result', resultText);
                        timeDuration := 000000T + "Execution Time";
                        tcase.SetAttribute('time', FORMAT(timeDuration, 0, '<Hours24,2><Filler Character,0>:<Minutes,2>:<Seconds,2>'));
                        suite.Add(tcase);

                        // increment parent counters
                        incrementAttribute(suite, 'testcasecount');
                        incrementAttribute(suite, 'total');
                        incrementAttribute(run, 'testcasecount');
                        incrementAttribute(run, 'total');
                    end;
                until testresults.Next() = 0;
        end;

        TempBlob.Blob.CreateOutStream(outStr, TextEncoding::UTF8);
        doc.WriteTo(outStr);
        TempBlob.Blob.CreateInStream(inStr, TextEncoding::UTF8);
        exit(inStrToText(inStr));
    end;

    local procedure incrementAttribute(var xmlElem: XmlElement; attributeName: Text)
    var
        attribute: XmlAttribute;
        attributeValue: Integer;
    begin
        xmlElem.Attributes().Get(attributeName, attribute);
        Evaluate(attributeValue, attribute.Value());
        xmlElem.SetAttribute(attributeName, Format(attributeValue + 1));
    end;

    local procedure inStrToText(inStr: InStream): Text
    var
        temp: Text;
        tb: TextBuilder;
    begin
        while not (inStr.EOS()) do begin
            inStr.ReadText(temp);
            tb.AppendLine(temp);
        end;
        exit(tb.ToText());
    end;

To read those from TFS, I publish a WebService[4] which calls the GetResultsForCodeunits method[5]. The test script queries it through New-WebServiceProxy and stores the result in an XML file. Through a "Publish Test Results" task, which you can see in my release process (again in German, "Testergebnisse veröffentlichen"), I let TFS know where to look for the test results. This produces the nice overview you see in the animated gif at the very beginning of this post.
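Before handing the file to the publish task, it can help to check what the codeunit actually produced. Here is a minimal sketch; Get-NUnitResultSummary is a hypothetical name. It counts the test-case results and flags test-suite or test-case elements without a fullname attribute, which turned out to trigger the AutomatedTestName error mentioned in the lessons learned below.

```powershell
# Hypothetical helper: summarize an NUnit-style result file written by the test script.
function Get-NUnitResultSummary {
    param([Parameter(Mandatory)] [string] $Path)

    [xml] $doc = Get-Content -Raw -Path $Path -Encoding UTF8
    $cases = @($doc.SelectNodes('//test-case'))
    # Elements lacking fullname; TFS asked for an AutomatedTestName in that case
    $missing = @($doc.SelectNodes('//test-suite[not(@fullname)] | //test-case[not(@fullname)]'))

    [pscustomobject] @{
        Total           = $cases.Count
        Passed          = @($cases | Where-Object { $_.GetAttribute('result') -eq 'Passed' }).Count
        Failed          = @($cases | Where-Object { $_.GetAttribute('result') -eq 'Failed' }).Count
        MissingFullName = $missing.Count
    }
}
```

A release step could, for example, throw when MissingFullName is not 0, so that a malformed file stops the release before it reaches the publish task.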

With all of that in place you have a full cycle: creating a WorkItem, making code changes which reference that WorkItem, and automatically triggering a build and then your tests. And if one of the tests fails, you can create a new bug WorkItem which references the failed test. The developer working on that bug can then easily go back and see which code change caused the failure and even why that code change was implemented. Pretty neat if you ask me!

Lessons learned or more details that you probably don’t care to know

If you stayed with me through all that text and code, I am duly impressed. You are either very bored or you really want to know how this is done, so here are some lessons I learned while implementing this:

- If you call a NAV WebService in a container and output the results, it seems like you get UTF-16, which confuses TFS even if you explicitly declare your XML file as UTF-16. The solution is to explicitly encode your output as UTF-8:

      $proxy.GetResultsForCodeunitsCS($env:codeunitids) | Out-File "${env:logpath}\result.xml" -Encoding utf8

- Invoke-NAVCodeunit uses Windows authentication. That is not a problem in a container, but you need to make sure that the current user inside the container is a valid NAV user with proper permissions by calling the appropriate cmdlets:

      New-NAVServerUser -WindowsAccount (whoami) NAV
      New-NAVServerUserPermissionSet -PermissionSetId SUPER -WindowsAccount (whoami) NAV

- If you only set the name attribute on test-suites and test-cases in an NUnit result file, you get a weird error saying that you need to set the AutomatedTestName. It took me a while to figure out that I could solve this by also setting the fullname attribute[6].

- Calling the CAL Test Runner’s Run method always seems to invoke all codeunits in a test suite, even if you set a codeunit ID.
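The UTF-16 from the first lesson may well come from PowerShell itself rather than from the web service. In Windows PowerShell 5.1, Out-File writes UTF-16 LE ("Unicode") unless -Encoding is given, and -Encoding utf8 there writes UTF-8 with a byte order mark. If a file without a BOM is preferred, a sketch like the following writes it through .NET instead. Write-TestResultFile is a hypothetical name.

```powershell
# Hypothetical helper: write the result XML as UTF-8 without a byte order mark.
function Write-TestResultFile {
    param(
        [Parameter(Mandatory)] [string] $Xml,
        [Parameter(Mandatory)] [string] $Path
    )
    # UTF8Encoding($false) means UTF-8 without BOM, the same in Windows PowerShell 5.1 and PowerShell 7
    $utf8NoBom = New-Object System.Text.UTF8Encoding($false)
    # .NET resolves relative paths against the process directory, not the PowerShell location
    $fullPath = $ExecutionContext.SessionState.Path.GetUnresolvedProviderPathFromPSPath($Path)
    [System.IO.File]::WriteAllText($fullPath, $Xml, $utf8NoBom)
}
```

In the test script it would take the place of the Out-File call, with the string returned by $proxy.GetResultsForCodeunitsCS as -Xml and "${env:logpath}\result.xml" as -Path.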

There probably were a couple more, but as this took me some days[7], I no longer remember all the head scratchers and wall bangers it took to get this to work. But in the end, it now does work 🙂 If you have improvements to my scripts, please go to https://github.com/tfenster/automated-al-testing and either create an issue or a pull request.

[1] I had already learned my way around Docker before NAV adopted it, and I like to stay close to the original tools for advanced use cases like Docker Compose, Swarm or maybe even Kubernetes in the future. But if you are starting with Docker or only plan to use it with NAV, please use navcontainerhelper, as it makes your life a lot easier for all the important scenarios.

[2] TFS seems to create the labels according to the locale which is active when creating the release process.

[3] Note that this is no longer my reduced build container but a standard NAV container, as I now need a database and a NAV server for my tests to run.

[4] This involves more code than it should in my opinion, but I’ve created an issue on the AL GitHub repo for that.

[5] Actually it calls a wrapper, as I can’t call a method with a List of Integer as parameter, but that is trivial.

[6] This actually is the only meaningful change I made to Kamil’s excellent script, apart from moving it from PowerShell to AL.

[7] Or actually mostly nights.